Fostering responsible AI in health care

With the right policies and partnerships, artificial intelligence can lead to higher-quality, more equitable care.

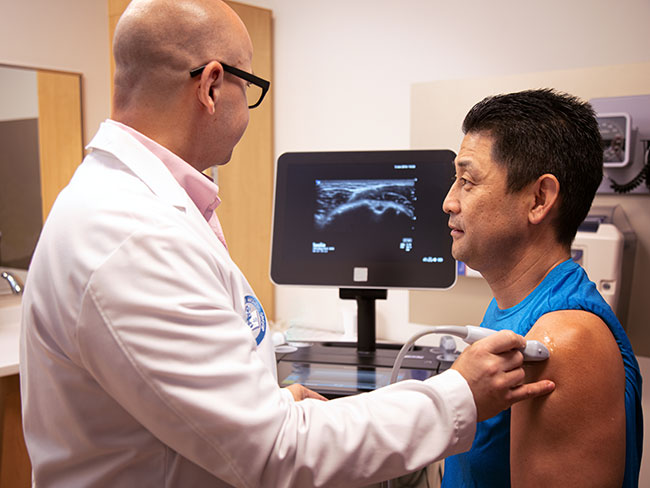

Health care providers use AI to improve patient care

Contributed by Daniel Yang, MD, Vice President, Artificial Intelligence and Emerging Technologies

Across the U.S., many organizations are realizing the transformative potential of artificial intelligence.

In health care, AI presents opportunities to improve patient outcomes and reduce health disparities. It can support care teams and enable more personalized health care experiences.

But health care leaders must understand and address risks to ensure AI is used safely and equitably. These risks include flawed algorithms, unsatisfying patient experiences, and privacy concerns.

At Kaiser Permanente, we’re taking a thoughtful approach to AI. AI tools alone don’t save lives or improve the health of our members. Rather, they enable our physicians and care teams to provide high-quality, equitable care.

How Kaiser Permanente uses AI

The health care industry generates almost 30% of all data in the world.

Artificial intelligence enables computers to learn and solve problems using a variety of data sources, including medical images, audio, and text. The insights generated from AI can support our physicians and employees in enhancing the care of our patients.

For example, a Kaiser Permanente program called Advance Alert Monitor uses AI to help prevent emergencies in the hospital. Every hour, the program automatically analyzes hospital patients’ electronic health data. If the program identifies a patient at risk of serious decline, it sends an alert to a specialized virtual quality nursing team. The nursing team reviews the data to determine what level of on-site intervention is needed.

This program is currently in use at 21 Kaiser Permanente hospitals across Northern California. A rigorous evaluation found that the program saves an estimated 500 lives per year.
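To make the hourly review loop described above more concrete, here is a minimal sketch in Python. It is not the Advance Alert Monitor implementation. The article does not say how risk is scored or what cutoff triggers an alert, so the `PatientSnapshot` type, the `risk_score` field, the `ALERT_THRESHOLD` value, and the sample data are all hypothetical. The sketch shows only the general pattern: score each patient, flag those above a cutoff, and hand the flagged list to people for review.

```python
from dataclasses import dataclass

# Hypothetical cutoff; the article does not state how risk is scored or thresholded.
ALERT_THRESHOLD = 0.8


@dataclass
class PatientSnapshot:
    patient_id: str
    risk_score: float  # assumed to come from an upstream model, 0.0 to 1.0


def patients_to_review(snapshots, threshold=ALERT_THRESHOLD):
    """Return IDs of patients at or above the threshold, highest risk first."""
    flagged = [s for s in snapshots if s.risk_score >= threshold]
    flagged.sort(key=lambda s: s.risk_score, reverse=True)
    return [s.patient_id for s in flagged]


# One hourly pass over made-up, illustrative data.
hourly_batch = [
    PatientSnapshot("A", 0.35),
    PatientSnapshot("B", 0.91),
    PatientSnapshot("C", 0.82),
]
review_queue = patients_to_review(hourly_batch)
print(review_queue)
```

In this sketch the code only builds a review queue. Deciding what intervention is needed stays with the nursing team, as in the workflow described above.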

The path to responsible AI

At Kaiser Permanente, AI tools must drive our core mission of delivering high-quality and affordable care for our members. This means that AI technologies must demonstrate a "return on health," such as improved patient outcomes and experiences.

We evaluate AI tools for safety, effectiveness, accuracy, and equity. Kaiser Permanente is fortunate to have one of the most comprehensive datasets in the country, thanks to our diverse membership base and powerful electronic health record system. We can use this anonymized data to develop and test our AI tools before we ever deploy them for our patients, care providers, and communities.

We are careful to make sure that the AI tools we use support the delivery of equitable, evidence-based care for our members and communities. We do this by testing and validating the accuracy of AI tools across our diverse populations. We are also working to develop and deploy AI tools that can help us identify and proactively address the health and social needs of our members. This can lead to more equitable health outcomes.
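One simple way to check accuracy across populations is to compute a tool's accuracy separately for each group and compare the results. The Python sketch below illustrates that idea under stated assumptions. The group labels, the record layout, and the sample data are hypothetical, and plain accuracy stands in for whatever metrics a real validation would use.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group):
    """Difference in accuracy between the best- and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Made-up records: (group label, model prediction, observed outcome).
records = [
    ("group_1", 1, 1), ("group_1", 0, 0), ("group_1", 1, 0), ("group_1", 0, 0),
    ("group_2", 1, 1), ("group_2", 0, 1), ("group_2", 0, 0), ("group_2", 1, 0),
]
per_group = accuracy_by_group(records)
print(per_group)
print(largest_gap(per_group))
```

A large gap between groups would suggest that the tool needs further review before it is used for all patients.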

Finally, once a new AI tool is implemented, we continuously monitor its outcomes to ensure it is working as intended. We stay vigilant; AI technology is rapidly advancing, and its applications are constantly changing.

Policymakers can help set guardrails

While Kaiser Permanente and other leading health care organizations work to advance responsible AI, policymakers have a role to play too. We encourage action in the following areas:

- National AI oversight framework — An oversight framework should provide an overarching structure for guidelines, standards, and tools. It should be flexible and adaptable to keep pace with rapidly evolving technology. New breakthroughs in AI are occurring monthly.

- Standards governing AI in health care — Policymakers should work with health care leaders to develop national, industry-specific standards to govern the use, development, and ethics of AI in health care. By working closely with health care leaders, policymakers can establish standards that are effective, useful, timely, and not overly prescriptive. This is important because standards that are too rigid can stifle innovation, which would limit the ability of patients and providers to experience the many benefits AI tools could help deliver.

Guardrails: Progress so far

The National Academy of Medicine convened a steering committee to establish a Health Care AI Code of Conduct that draws from health care and technology experts, including Kaiser Permanente. This is a promising start to developing an oversight framework.

In addition, Kaiser Permanente appreciates the opportunity to be an inaugural member of the U.S. AI Safety Institute Consortium. The consortium is a multisector work group setting safety standards for the development and use of AI, with a commitment to protecting innovation.

Considerations for policymakers

As policymakers develop AI standards, we urge them to keep a few important points top of mind.

- Lack of coordination creates confusion. Government bodies should coordinate at the federal and state levels to ensure AI standards are consistent and not duplicative or conflicting.

- Standards need to be adaptable. As health care organizations continue to explore new ways to improve patient care, it is important for them to work with regulators and policymakers to make sure standards can be adapted by organizations of all sizes and levels of sophistication and infrastructure. This will allow all patients to benefit from AI technologies while also being protected from potential harm.

AI has enormous potential to help make our nation’s health care system more robust, accessible, efficient, and equitable. At Kaiser Permanente, we’re excited about AI’s future and eager to work with policymakers and other health care leaders to ensure all patients can benefit.

-

Social Share

- Share Fostering Responsible AI in Health Care on Pinterest

- Share Fostering Responsible AI in Health Care on LinkedIn

- Share Fostering Responsible AI in Health Care on Twitter

- Share Fostering Responsible AI in Health Care on Facebook

- Print Fostering Responsible AI in Health Care

- Email Fostering Responsible AI in Health Care

December 15, 2025

‘Free’ drug samples aren’t really free

Pharmaceutical marketing hurts patient care and drives up costs. At Kaiser …

December 9, 2025

Buprenorphine saves lives. Why can’t more patients get it?

Policy changes are crucial for better opioid addiction treatment.

November 19, 2025

Will AI rules leave small hospitals behind?

Policymakers can design regulations that protect patients and work for …

October 21, 2025

Health coverage is key to early breast cancer detection

Timely screenings save lives and lower costs, but millions of people miss …

September 5, 2025

Congress must act to keep health insurance affordable

Enhanced premium tax credits help millions of people afford health insurance. …

August 5, 2025

Pharmaceutical marketing hurts patient care

At Kaiser Permanente, our doctors and pharmacists work together to ensure …

July 22, 2025

Mental health care without borders

When clinicians can practice across state lines, more people can get the …

June 17, 2025

We must grow the health care workforce

At Kaiser Permanente, we educate future clinicians and offer programs that …

May 21, 2025

Trust unlocks AI’s potential in health care

Artificial intelligence can improve health care by reducing administrative …

April 21, 2025

Congress must protect Medicaid and insurance tax credits

Medicaid and tax credits for acquiring coverage are essential for patients, …

March 25, 2025

AI in health care: 7 principles of responsible use

These guidelines ensure we use artificial intelligence tools that are safe …

March 24, 2025

Our nation's health depends on coverage

Health insurance is key to a strong country — it improves health and boosts …

February 20, 2025

Our nation’s health suffers if Congress cuts Medicaid

Reducing Medicaid funding will lead to worse health outcomes, overburden …

January 15, 2025

Why the U.S. needs more community health workers

With the right strategies and public policies, we can strengthen our nation’s …

December 10, 2024

Accelerating growth in the mental health care workforce

Actions policymakers can take to grow and diversify the mental health care …

November 11, 2024

Medicare telehealth flexibilities should be here to stay

We urge Congress to extend policies that have improved access to care and …

September 19, 2024

First look at new Lakewood facilities

New medical offices will enhance the health care experience for members …

September 16, 2024

Voting affects the health of our communities

In honor of National Voter Registration Day, we encourage everyone who …

July 22, 2024

Our nation’s health depends on well-funded research

Advanced medical science improves patient outcomes. We urge lawmakers to …

July 2, 2024

Reducing cultural barriers to food security

To reduce barriers, Food Bank of the Rockies’ Culturally Responsive Food …

June 28, 2024

Health Action Summit highlights mental health opportunities

The Kaiser Permanente Colorado Health Action Summit gathered nonprofits, …

June 3, 2024

A call to ‘Connect’ for cancer prevention research

Participate in a study to help uncover the causes of cancer and how to …

May 7, 2024

Can the badly broken prescription drug market be fixed?

Prescription drugs are unaffordable for millions of people. With the right …

April 12, 2024

It’s time to address America’s Black maternal health crisis

Health care leaders and policymakers should each play their part to help …

February 12, 2024

Proposition 1 would bolster mental health care in California

Kaiser Permanente supports the ballot measure to expand and improve mental …

January 31, 2024

Prioritizing policies for health and well-being in Colorado

CityHealth’s 2023 Annual Policy Assessment awards cities for their policies …

January 22, 2024

Solutions for strengthening the mental health care workforce

Better public policies can help address the challenges. We encourage policymakers …

December 20, 2023

Funding solutions to end gun violence

Researchers and organizations are exploring inventive ways to reduce gun …

December 20, 2023

Research transforms care for people with multiple sclerosis

Our researchers are leading the way to more effective, affordable, and …

December 15, 2023

Climate change is already affecting our health

The health care industry is responsible for 8% to 10% of harmful emissions …

December 6, 2023

Leaders named among health care’s most influential

Greg A. Adams; Maria Ansari, MD, FACC; and Ramin Davidoff, MD, have been …

November 13, 2023

Congress must act to address drug shortages

Kaiser Permanente is working to address drug shortages and support policies …

October 23, 2023

The future of health care is digital

Nari Gopala, Kaiser Permanente’s chief digital officer, answers 3 questions …

October 4, 2023

An easier way to manage multiple prescriptions

If you have an ongoing health condition, you know it can be tricky to keep …

September 27, 2023

10 school districts receive next round of RISE grants

The Thriving Schools program helps educators and students in Colorado integrate …

September 13, 2023

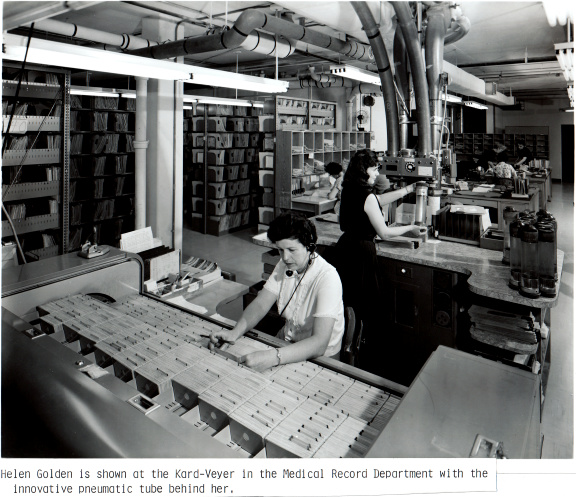

Transforming the medical record

Kaiser Permanente’s adoption of disruptive technology in the 1970s sparked …

September 6, 2023

Advancing mental health crisis care through public policy

Organizations that provide public mental health crisis services must work …

July 11, 2023

Our prescription for safe, effective, more affordable drugs

Our approaches ensure effectiveness and safety, and drive cost savings. …

June 1, 2023

Policy recommendations from a mental health therapist in training

Changing my career and becoming a therapist revealed ways our country can …

April 25, 2023

Hannah Peters, MD, provides essential care to ‘Rosies’

When thousands of women industrial workers, often called “Rosies,” joined …

April 11, 2023

Collaboration is key to keeping people insured

With the COVID-19 public health emergency ending, states, community organizations, …

January 17, 2023

Lawmakers must act to boost telehealth and digital equity

Making key pandemic-era telehealth policies permanent and ensuring more …

November 8, 2022

Protecting access to medical care for legal immigrants

A statement of support from Kaiser Permanente chair and CEO Greg A. Adams …

October 21, 2022

Kaiser Permanente therapists ratify new contract

New 4-year agreement with NUHW will enable greater collaboration aimed …

October 6, 2022

We’re a Fast Company Innovation by Design winner

Kaiser Permanente is the first health care organization to win Design Company …

October 1, 2022

Innovation and research

Learn about our rich legacy of scientific research that spurred revolutionary …

August 16, 2022

Our support for the Inflation Reduction Act

A statement from chair and chief executive Greg A. Adams on the importance …

May 2, 2022

How to transform mental health care: Follow the research

We applaud President Biden and Congress as they begin to set policies that …

March 22, 2022

NUHW psych-social employees ratify agreement

The agreement between Kaiser Permanente and the NUHW in Southern California …

March 22, 2022

Our commitment to equity and our LGBTQIA+ communities

A statement from chair and chief executive officer Greg A. Adams.

October 12, 2021

Beyond advocacy: Requiring vaccination to stop COVID-19

Kaiser Permanente and other leading companies are mandating COVID-19 shots …

September 10, 2021

‘Baby in the drawer’ helped turn the tide for breastfeeding

This innovation in rooming-in allowed newborns to stay close to mothers …

July 7, 2021

Achieving health equity

Equal medical care is not enough to end disparities in health outcomes.

April 27, 2021

Health data privacy

Protecting our members’ personal health information

April 23, 2021

Medicaid

Delivering high-quality Medicaid coverage and services

September 28, 2020

A legacy of disruptive innovation

Proceeds from a new book detailing the history of the Kaiser Foundation …

August 26, 2020

Kaiser Permanente’s pioneering nurse-midwives

The 1970s nurse-midwife movement transformed delivery practices.

April 27, 2020

Health care reform

Affordable, accessible health care and coverage

March 4, 2020

Our impact

How Kaiser Permanente strengthens communities and advances public policies …

March 1, 2020

Prescription drug pricing

Bringing down the high cost of medication

February 29, 2020

California

February 1, 2020

Georgia

February 1, 2020

Washington

February 1, 2020

Hawaii

February 1, 2020

Colorado

August 2, 2019

Thriving with 1960s-launched KFOG radio

Kaiser Broadcasting radio connected listeners, while TV stations brought …

June 5, 2019

Breaking LGBT barriers for Kaiser Permanente employees

“We managed to ultimately break through that barrier.” — Kaiser Permanente …

February 5, 2019

Mobile clinics: 'Health on wheels'

Kaiser Permanente mobile health vehicles brought care to people, closing …

April 30, 2018

Nursing pioneers leads to a legacy of leadership

Kaiser Foundation School of Nursing students learned a new philosophy emphasizing …

April 19, 2018

Wasting nothing: Recycling then and now

Environmentalism was a common practice at the Kaiser shipyards long before …

April 12, 2018

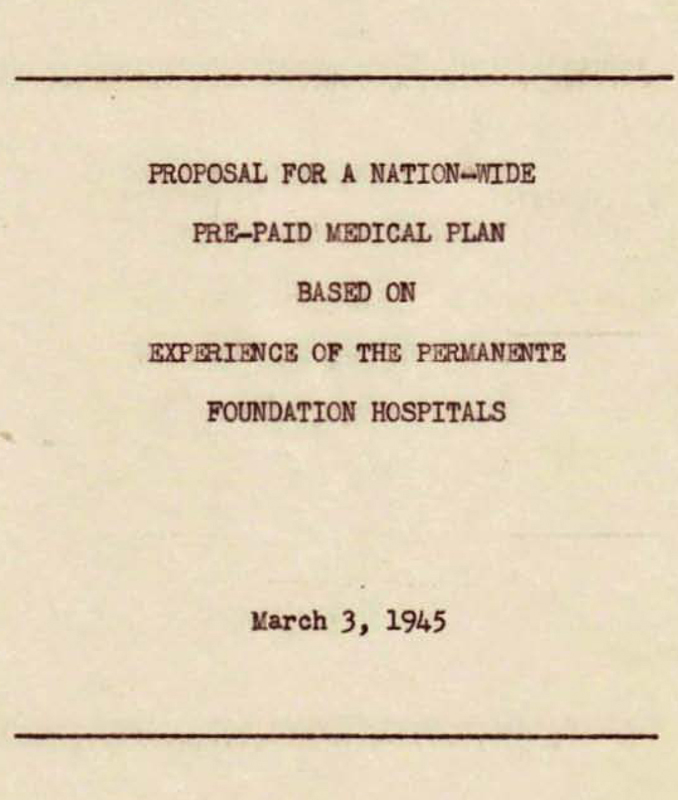

Harold Hatch, health insurance visionary

The founding of Kaiser Permanente's concept of prepaid health care in the …

March 26, 2018

5 physicians who made a difference

Meet 5 outstanding doctors who advanced the practice of medical care with …

December 19, 2017

From boats to books: A history of Kaiser Permanente’s medical libraries

Kaiser Permanente librarians are vital in helping clinicians remain updated …

November 7, 2017

Patriot in pinstripes: Honoring veterans, home front, and peace

Henry J. Kaiser's commitment to the diverse workforce on the home front …

October 12, 2017

An experiment named Fabiola

Health care takes root in Oakland, California.

August 15, 2017

Sidney R. Garfield, MD, on medical care as a right

Hear Kaiser Permanente’s physician co-founder talk about what he learned …

August 10, 2017

‘Good medicine brought within reach of all'

Paul de Kruif, microbiologist and writer, provides early accounts of Kaiser …

July 14, 2017

Kaiser’s role in building an accessible transit system

Harold Willson, an employee, and an advocate for accessible transportation, …

July 7, 2017

Mending bodies and minds — Kabat-Kaiser Vallejo

The expanded new location provided care to a greater population of members …

June 23, 2017

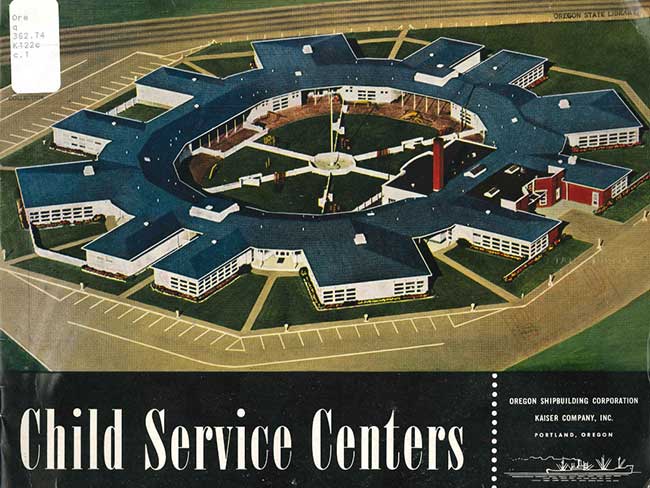

No getting round it: An innovative approach to building design

Kaiser Permanente incorporated innovative circular architectural designs …

June 14, 2017

Kabat-Kaiser: Improving quality of life through rehabilitation

When polio epidemics erupted, pioneering treatments by Dr. Herman Kabat …

June 9, 2017

Edmund (Ted) Van Brunt, pioneer of electronic health records, dies at age …

Throughout his career, Dr. Van Brunt applied computers and databases in …

May 4, 2017

How a Kaiser Permanente nurse transformed health education

Kaiser Permanente's Health Education Research Center and Health Education …

March 1, 2017

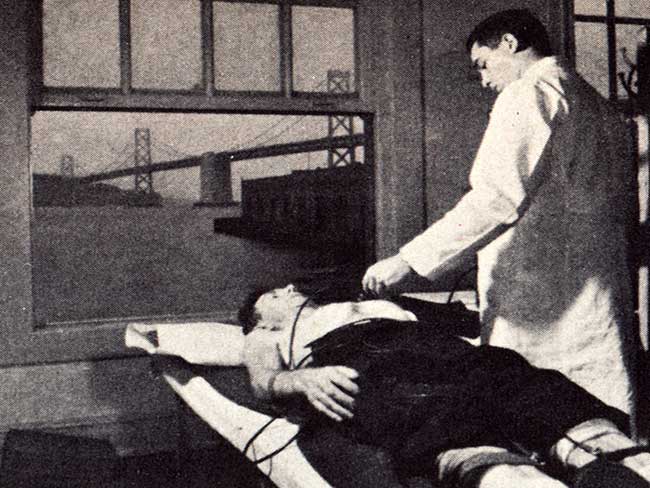

Screening for better health: Medical care as a right

When industrial workers joined the health plan, an integrated battery of …

February 17, 2017

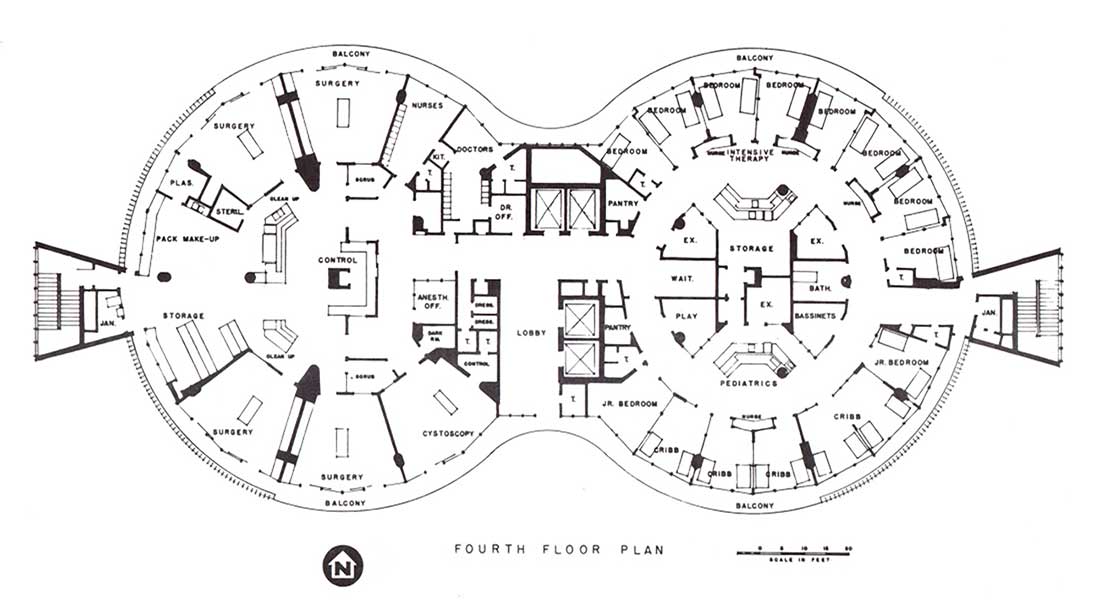

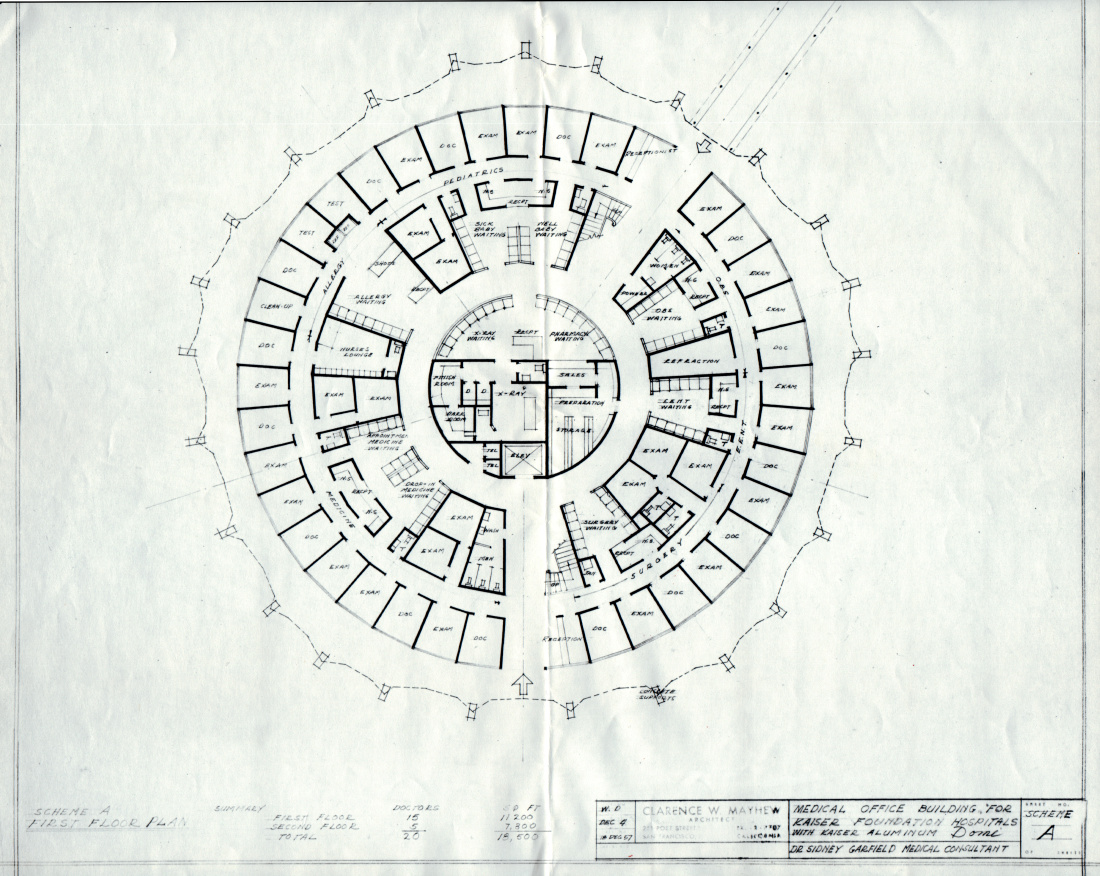

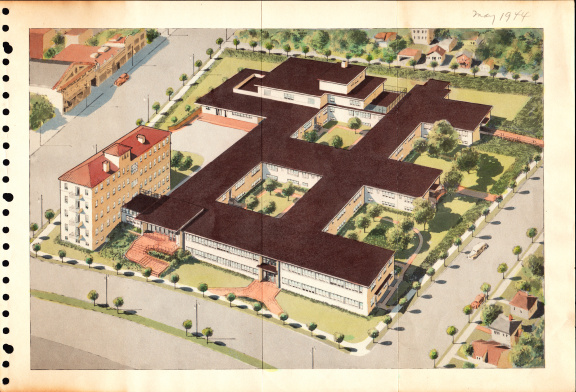

Experiments in radial hospital design

The 1960s represented a bold step in medical office architecture around …

October 12, 2016

Kaiser’s geodesic dome clinic

There are hospital rounds, and there are round hospitals.

April 20, 2016

Henry J. Kaiser’s environmental stewardship

Since the 1940s, Kaiser Industries and Kaiser Permanente have a long history …

November 13, 2015

Dr. Morris Collen’s last book on medical informatics

The last published work of Morris F. Collen, MD, one of Kaiser Permanente’s …

October 29, 2015

From paper to pixels — the new paradigm of electronic medical records

Transitioning to electronic health records introduced new approaches, skills, …

September 23, 2015

Kaiser Permanente and NASA — taking telemedicine out of this world

Kaiser Permanente International designs, develop, and test a remote health …

July 22, 2015

Kaiser Permanente as a national model for care

Kaiser Permanente proposed a revolutionary national health care model after …

December 11, 2014

Henry J. Kaiser, geodesic dome pioneer

October 8, 2014

Breast cancer isn’t just a woman’s issue

July 23, 2014

Kaiser shipyards pioneered use of wonder drug penicillin

Though supplies for civilians were limited, Dr. Morris Collen’s wartime …

June 24, 2014

Kaiser Permanente's first hospital changes and grows

A collection of vintage photos that chronicle the evolution of Oakland …

September 23, 2013

Kaiser Permanente pioneered solar power in health facilities in 1980

Santa Clara Medical Center hosted a solar panel project in 1979 to demonstrate …

September 19, 2013

Kaiser’s postwar suburbs designed for pedestrian safety and fitness

Model neighborhoods close to jobs and laid out with meandering lanes and …

July 9, 2013

Kaiser Permanente Web presence rooted in past

The first Kaiser Permanente website launched in 1996, creating a new way …

March 6, 2013

Decades of health records fuel Kaiser Permanente research

Over 50 years of early Kaiser Permanente electronic health records since …

October 23, 2012

Disabled Kaiser Permanente employee changed course of public transportation

In the 1960s, Harold Willson successfully advocated for the historically …